OK, so let me get this straight. From my recent experience, we can’t get an automated chicken door to work right, but we want to give machines independent authority to kill people?

This doesn’t strike me as a good idea.

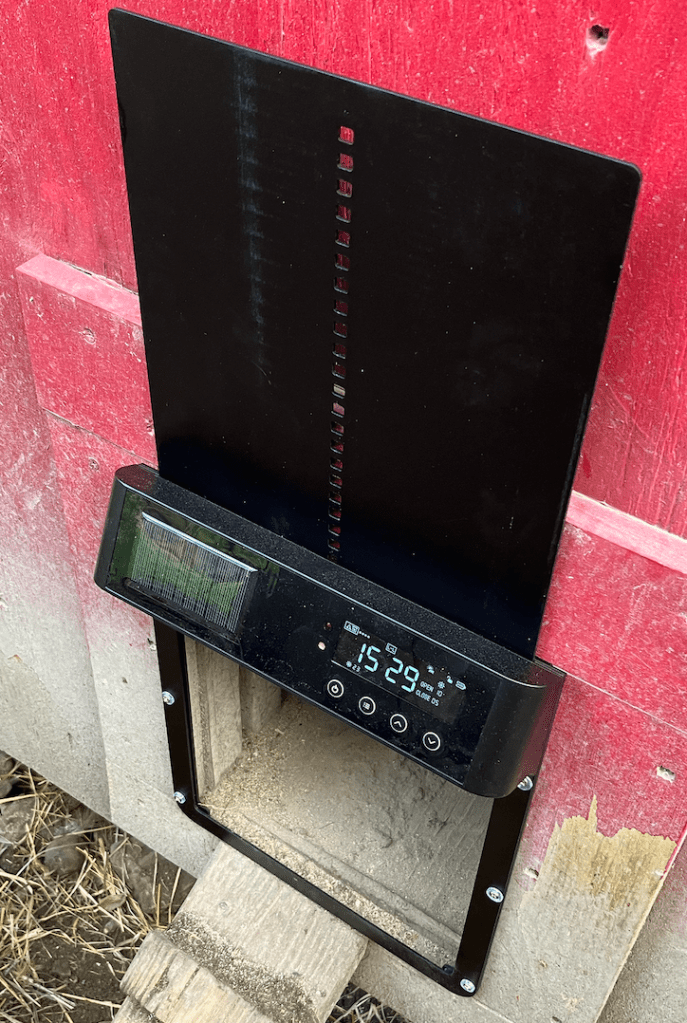

So, I came across this automated chicken door on the Beast. It looked like a grand idea: a door that automatically opens in the daylight and closes at sunset. A great concept; you must lock up the birds at night because that’s when the other beasts come around to get that delicious, succulent chicken flesh. If you keep hens for eggs, this is undesirable. Nothing like going to the hen house and finding one of the girls in pieces because some little furry wretch decided he/she needed a snack.

Of course, opening and closing the chicken house door is an extra step in your day. It’s kind of trivial, but it can be annoying, for example, at night in a rainstorm, or in the morning when you’d rather take your time getting outside. When you keep animals, this stuff isn’t optional. The beasts depend upon you, and you have to do your bit. Also, someone else must do this if you have the silly notion of going on vacation. It’s bad enough coordinating care for your animals. It’s worse to explain all the extra steps and inconvenience to a neighbor.

So, this door looked great. It would surely work, right? It has two functions: go up on a signal from a very simple photoelectric cell, and then go down. This isn’t rocket science.

I installed the door; it was straightforward. Charge it up, install six screws, then walk away. For three days, it was OK. On the morning of the fourth day, I went outside late, and the chickens were still cooped up. What the hell, I thought. I hit the manual “up” button, and the problem was solved. That night, the door went down. All good. The very next morning, same deal. So, I changed the morning setting to the timer function.

Nah, that didn’t help. Then, the door started randomly cycling up and down. Later, when I checked before sunset, the door had gone down prematurely and stayed down. I had to gather my distraught birds and put them in the coop. They get pissed when they can’t roost at night. This is exactly why such an automatic door is good: chickens roost around sunset, and the door closes.

This one didn’t work. I returned it, thinking maybe I had a bad unit. The new one showed up a few days later.

Guys, this one didn’t even last a day. To make matters worse, it wouldn’t cycle manually, either.

What a pain.

And this is a stupid chicken door, operating on a zero or a one. And it can’t be trusted to do that one thing and do it well? And we’re talking about letting overgrown chicken doors independently launch Hellfire missiles?

Since when is this a good idea?

At present, we are letting some brilliant/stupid people dictate where we’re headed as a society. What do I mean by brilliant/stupid?

Mr. Musk is a great example. He is a brilliant assembler of teams to achieve a singular goal. For example, SpaceX. He had an idea: “I want to build reusable spacecraft to radically lower the cost of space access and make it far more feasible.” With a singular drive, he surrounded himself with people to accomplish that goal. He succeeded, and I admired this accomplishment.

He didn’t have the common sense to stop there. This is where the stupid part comes in. He made the assumption that because he was amazing at assembling teams (Tesla is another great example), he was brilliant in ALL areas, not just his specialty. This is also known as hubris, the stupidity that destroys brilliance.

Hubris, definition.

“Excessive pride, or self-confidence.”

Have you ever noticed that people can be very confident about demonstrably rubbish claims? The world is chock-full of such examples. I won’t bother to list them.

I posit that our current headlong rush to an AI-driven world is spearheaded by brilliant people without common sense. People who build chicken doors that work well in the lab but fail spectacularly under real-world conditions. It was easy to see when the chicken door started to glitch and easy to dismiss the first warnings. Finally, the chicken door failed completely and obviously.

Who will see the subtle glitch in an autonomous combat system, which will be maintained by an eighteen-year-old who met the minimum standard of an Army maintenance course? I can see how this will play out. Here’s an example, based upon past experience.

“Hey, Sergeant. I pressed F18, and the screen blanked.”

“So?”

“Well, it was weird.”

“Does it fuckin’ work now?”

“Yeah. I reset it, and now it looks OK.”

The sergeant looks up from his iPhone, where he has been looking at porn. He speaks.

“Why are you bothering me with this shit? Fuckin’ thing works, right?”

“I guess…”

“Get the fuck out of here before I go lookin’ for a Late Man.”

Private Whoever skedaddles. (BTW, “Late Man” in a maintenance unit is the soldier picked to work on a problematic machine while all of his peers go fuck off. It’s an undesirable assignment for a young troop and is frequently a disciplinary measure.)

War machine and its quirks forgotten, Private Whoever takes off to the barracks and gets drunk. His sergeant does the same. The midnight shift launches said vehicle, which performs to spec. Until it doesn’t, while it’s loitering over a battlefield somewhere, loaded with high explosives. The machine malfunctions, with predictably fatal results for someone who didn’t have to die.

We can even bump this up a notch and give said killing machine The Big One, the Silverfish, or nuclear weapons. A simple flaw in the software or hardware, and it ignites a crisis.

All caused by a brilliant/stupid person who had an idea. “Wouldn’t it be cool…” So, they do it, giving zero fucks about any possible third-order effects, and lacking any awareness of real-world conditions. Someone takes their idea, sells it to the lowest bidder, and off we go.

I’m hardly a Luddite. I recognize that AI has enormous potential. My fiction is full of the wonders and horrors of such a world. But damn it, before it is fielded in critical areas, you need to make sure it works, every single time.

This was my beef with the M16 rifle, which has become a very reliable battle rifle. But it took sixty years of service and numerous iterations to become what it is today. In the beginning, it was a great idea that really sucked in the field under imperfect conditions. Soldiers in Vietnam died because of its early flaws, due in large part to hasty fielding under wartime conditions. Yes, it is a very good rifle today, and if soldiers do their part, it will work every time they pull the trigger. But it sure as hell didn’t start that way.

No, the US military took an unproven novel design and dumped it onto a conscript army familiar with the M1 Garand and its cousin, the M14. Both were far more forgiving of field conditions than the M16. So, the kids in the rice paddies treated the M16 like the M1 or M14, and bad things happened. As a result, the M16’s design went through numerous iterations, and the military changed its culture and training to allow for the weaknesses of the black rifle. Ultimately, it became a good battle rifle, but the process was painful, to say the least.

And that was a simple assault rifle, not a flying computer loaded with semi-autonomous weapons.

Did you get that part about reliability? Right now, it’s simply not there. If a machine doesn’t do exactly what it’s supposed to, every time and under all conditions, it is worthless.

Worse, it defeats your stated purpose by doing the opposite of the desired intent.

Don’t let psycho chicken doors operate autonomous weapons. It’s common sense.

Common sense is not common. As a society, we are heaping dump trucks full of money and power on people who have none of it.

Give it some thought.